Encoder-Decoder

ChatGPT is one half of the puzzle

In my last article, I covered the dynamic aspect of attention and how transformers use these attention layers to make sense of text.

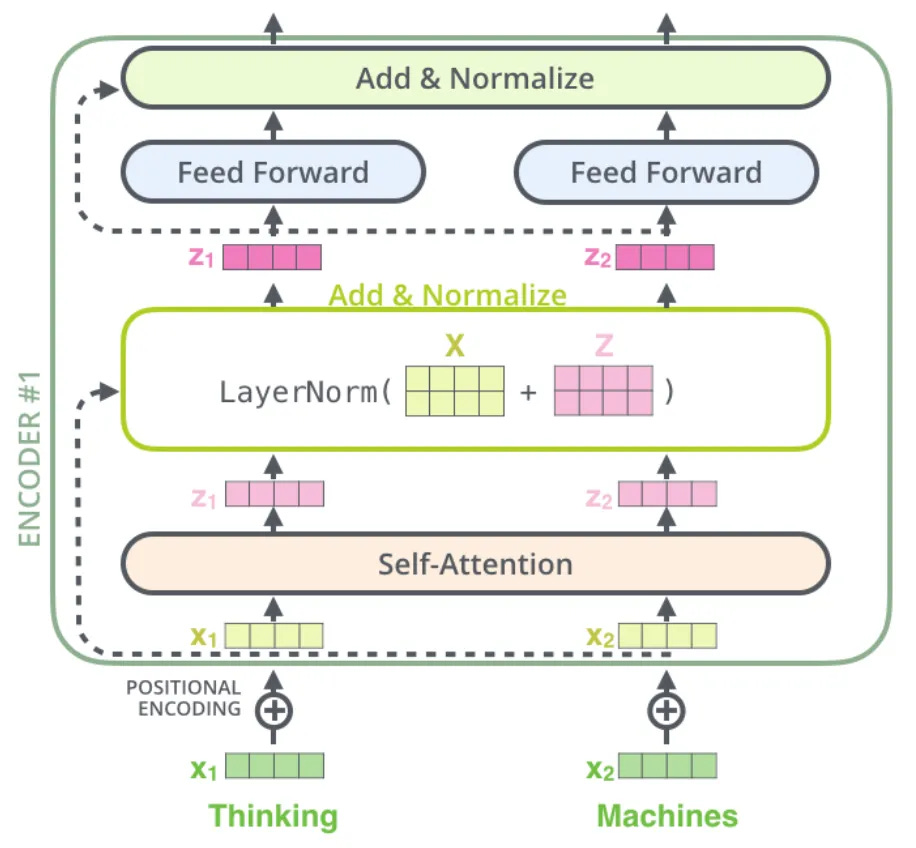

This diagram covers a forward pass and shows only the encoder.

There is another side of the puzzle, the decoder architecture, and this is what ChatGPT is: a decoder-only model. ChatGPT, released in 2022, has drawn enormous fanfare and delivered impressive results. It reportedly has a billion users and is launching a search tool. But it has limitations that are fundamental to its architecture.

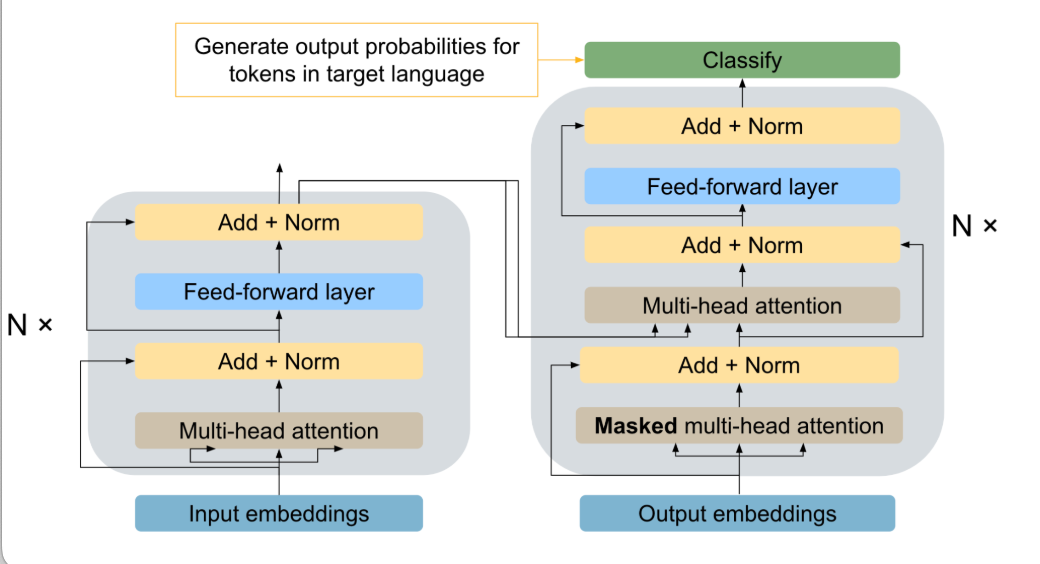

Above is the full transformer architecture, with both the encoder and the decoder. You can see that the decoder is “attending” to the encoder’s output (the result of the encoder’s final normalization layer) and moving that through its own layers, including attention, normalization, and feed-forward, before a final linear layer and softmax turn the result into output probabilities over the vocabulary.
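To make the cross-attention step concrete, here is a minimal plain-Python sketch, assuming toy sizes and random stand-in weights. The decoder’s states supply the queries, while the encoder’s output supplies the keys and values. The names `cross_attention`, `output_probabilities`, and `w_vocab` are hypothetical, and a real transformer’s learned query/key/value projections, multiple heads, residual connections, normalization, and feed-forward sublayers are all left out.

```python
import math
import random

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # Plain-Python matrix product: (n x k) @ (k x m) -> (n x m).
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def attention(queries, keys, values):
    # Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.
    d = len(keys[0])
    scores = matmul(queries, transpose(keys))
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, values)

def cross_attention(decoder_states, encoder_output):
    # Hypothetical helper: queries come from the decoder, keys and values from the encoder.
    # A real layer would first apply learned projections W_q, W_k, W_v; omitted here.
    return attention(decoder_states, encoder_output, encoder_output)

def output_probabilities(hidden, w_vocab):
    # Final linear layer: one score (logit) per vocabulary word for each position,
    # then softmax turns each row of scores into a probability distribution.
    logits = matmul(hidden, w_vocab)
    return [softmax(row) for row in logits]

random.seed(0)
d_model, vocab_size = 4, 6
# Random stand-ins (assumption): 5 encoded source tokens, 3 decoder positions.
encoder_output = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(5)]
decoder_states = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(3)]
w_vocab = [[random.gauss(0, 1) for _ in range(vocab_size)] for _ in range(d_model)]

mixed = cross_attention(decoder_states, encoder_output)
probs = output_probabilities(mixed, w_vocab)
print(len(probs), len(probs[0]))  # prints: 3 6
```

Each row of `probs` sums to 1, which is what lets the model read it as a probability for every word in the (tiny, hypothetical) vocabulary. Plain lists keep the sketch dependency-free; a real implementation would use a tensor library.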

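What’s wild here is that ChatGPT has essentially cleaved off half of the architecture and still gotten great results. It’s not a panacea, though, and it is still limited. With no encoder, each token can attend only to the tokens before it, never to the ones after it, so the model never reads the input in both directions at once.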
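A decoder-only model enforces this with a causal mask. The sketch below, assuming a toy four-token sentence and uniform raw scores (the helpers `causal_mask` and `masked_softmax` are hypothetical names), builds the mask and shows how it zeroes out attention to later positions.

```python
import math

def causal_mask(n):
    # Row i marks which positions token i may attend to: only j <= i (itself and earlier tokens).
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, allowed):
    # Disallowed positions get weight 0; softmax is taken over the allowed positions only.
    kept = [s for s, ok in zip(scores, allowed) if ok]
    m = max(kept)
    exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]

tokens = ["The", "cat", "sat", "down"]
mask = causal_mask(len(tokens))
for tok, row in zip(tokens, mask):
    print(f"{tok:>5}: " + " ".join("x" if ok else "." for ok in row))
# prints:
#   The: x . . .
#   cat: x x . .
#   sat: x x x .
#  down: x x x x

uniform_scores = [0.0] * len(tokens)  # equal raw scores make the mask's effect obvious
print(masked_softmax(uniform_scores, mask[1]))  # prints: [0.5, 0.5, 0.0, 0.0]
```

“cat” splits its attention between “The” and itself and gives nothing to “sat” or “down”. Production implementations typically add negative infinity to the masked scores before an ordinary softmax instead of filtering lists, which yields the same weights.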

Let’s say you mask part of the input. An encoder like BERT attends to both sides of the masked token bidirectionally and handles this well out of the box. You’ll need to fine-tune GPT to do this, however, because it works through text strictly left to right, thinking “next token, next token, next token,” instead of building representations of the input tokens that draw on context from both sides.
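Here is a toy illustration of that difference, assuming whitespace-split tokens and BERT’s `[MASK]` placeholder. `visible_context` is a hypothetical helper, not part of any library, and it only lists which tokens each design can draw on; it does not run a model.

```python
def visible_context(tokens, position, bidirectional):
    # Hypothetical helper: which other tokens can inform the representation at `position`?
    # Bidirectional (encoder / BERT-style): every other token, left and right.
    # Causal (decoder / GPT-style): only the tokens to the left.
    if bidirectional:
        return [t for i, t in enumerate(tokens) if i != position]
    return tokens[:position]

sentence = ["The", "[MASK]", "barked", "at", "the", "mailman"]
mask_pos = sentence.index("[MASK]")

print("encoder sees:", visible_context(sentence, mask_pos, bidirectional=True))
# prints: encoder sees: ['The', 'barked', 'at', 'the', 'mailman']
print("decoder sees:", visible_context(sentence, mask_pos, bidirectional=False))
# prints: decoder sees: ['The']
```

The decisive clue, “barked,” sits to the right of the mask, so only the bidirectional encoder can use it when building a representation of the masked position.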

You can see where I’m going here. An encoder, on the left of the diagram, will (theoretically) find a machine-level meaning of the words. GPT (generative pre-trained transformer) is fundamentally limited. It can only decode the world; it cannot encode the world into itself. Imagine yourself making constant predictions, writing beautifully for pages.

But you are in some museum behind thick glass, constantly observing, never truly touching or interacting. You see the humans drop new fish or food into the aquarium and give you better tools to see more fish, but when you try to touch the fish or really feel the water, you can’t.

You can’t because you don’t have access to the left side. You can’t build your understanding of water because you can’t encode it. Maybe this is sad, but the analogy holds. If you are to outgrow it, we will have to break the glass. We will have to allow you to see the world and encode it.